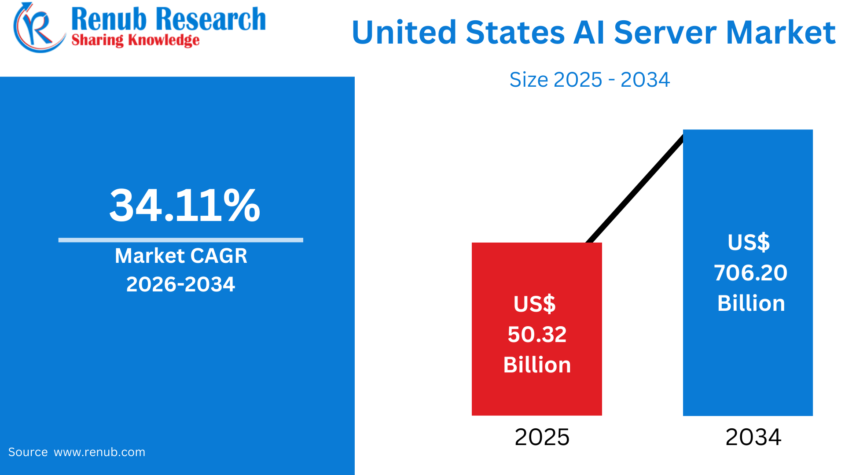

United States AI Server Market Size & Forecast 2026–2034

According to Renub Research, the United States AI server market is entering a period of rapid expansion as artificial intelligence moves from experimental use cases to large-scale, production-critical deployments. The market is projected to grow from US$ 50.32 billion in 2025 to US$ 706.20 billion by 2034, a compound annual growth rate (CAGR) of 34.11% over 2026–2034.
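The headline growth rate can be reproduced from the two endpoint values. The short Python sketch below assumes the standard CAGR definition, (end / start)^(1 / years) - 1, with 2025 as the base year so that 2026–2034 spans nine compounding steps. The function names are illustrative only.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.3411 means 34.11% per year)."""
    if start_value <= 0 or end_value <= 0 or periods <= 0:
        raise ValueError("values and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


def project(base_value: float, rate: float, base_year: int, year: int) -> float:
    """Value in `year` if `base_value` compounds at `rate` every year after `base_year`."""
    return base_value * (1.0 + rate) ** (year - base_year)


# Endpoints from the forecast above, in US$ billion.
start_2025 = 50.32
end_2034 = 706.20

# 2025 is the base year, so 2026 through 2034 gives nine compounding steps.
rate = cagr(start_2025, end_2034, periods=2034 - 2025)
print(f"Implied CAGR: {rate:.2%}")  # Implied CAGR: 34.11%

# Compounding the 2025 value at that rate recovers the 2034 endpoint.
print(round(project(start_2025, rate, 2025, 2034), 2))  # 706.2
```

Because the 34.11% figure falls out of the 2025 and 2034 values alone, the stated CAGR is consistent with treating 2025 as the base year and compounding annually through 2034.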

This dramatic growth is fueled by the rapid proliferation of generative AI, large language models (LLMs), high-performance computing (HPC), and big data analytics. As AI becomes a foundational technology across industries, demand for advanced GPU- and accelerator-based server infrastructure is surging across hyperscale data centers, cloud platforms, enterprise IT environments, and research institutions throughout the United States.

Download Free Sample Report: https://www.renub.com/request-sample-page.php?gturl=united-states-ai-server-market-p.php

United States AI Server Market Overview

An AI server is a high-performance computing system designed specifically to process artificial intelligence workloads such as machine learning, deep learning, natural language processing, computer vision, and generative AI. Unlike traditional enterprise servers, AI servers integrate powerful accelerators, including GPUs, TPUs, FPGAs, or ASICs, that enable massively parallel computation and rapid processing of large datasets.

These servers are equipped with high-bandwidth memory, ultra-fast storage, and advanced networking technologies to support distributed model training and real-time inference at scale. AI servers are deployed extensively in hyperscale data centers, cloud platforms, enterprise data centers, academic research facilities, and government infrastructure.
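To make "distributed model training" more concrete, the sketch below simulates synchronous data-parallel training in plain Python. Each simulated accelerator computes a gradient on its own shard of the data, and an all-reduce averages those gradients so every replica applies the same update. This is a toy illustration under stated assumptions, not how any particular vendor's software works: `all_reduce_mean` is a hypothetical stand-in for the collective operations that libraries such as NCCL run over the server interconnect, and the one-parameter model and data are invented.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Hypothetical stand-in for a collective all-reduce: every worker gets the mean."""
    return sum(values) / len(values)


def data_parallel_step(w: float, shards: List[List[Sample]], lr: float) -> float:
    # Each simulated accelerator computes a gradient on its own slice of the data...
    grads = [local_gradient(w, shard) for shard in shards]
    # ...then gradients are averaged so every replica applies the identical update.
    return w - lr * all_reduce_mean(grads)


# Invented toy data generated from y = 3x, split evenly across four simulated workers.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
print(round(w, 4))
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so the replicas learn the same weight (close to 3.0) as a single machine would. Because every step waits on the gradient exchange, interconnect bandwidth and latency can limit how well training scales across many GPUs, which is one reason AI servers pair accelerators with high-speed networking.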

The United States leads global AI server adoption due to its dominance in artificial intelligence research, cloud computing, semiconductor design, and digital infrastructure. Technology giants, cloud service providers, startups, and research laboratories rely heavily on AI servers to train foundation models, perform large-scale analytics, and automate complex workflows. As AI adoption accelerates in healthcare, finance, retail, defense, manufacturing, and autonomous systems, AI servers are becoming a core pillar of the U.S. digital economy.

Growth Drivers in the United States AI Server Market

Explosion of AI Adoption Across Industries

The most significant driver of the U.S. AI server market is the rapid adoption of AI across nearly every major industry vertical. Enterprises, cloud providers, and startups are deploying AI workloads for recommendation engines, fraud detection, predictive maintenance, customer personalization, and generative AI applications. This shift is dramatically increasing demand for high-performance servers capable of supporting large models and low-latency inference.

Digital transformation initiatives across finance, retail, manufacturing, and government are moving beyond pilot projects into full production environments. This transition requires resilient, scalable AI infrastructure rather than limited experimental systems. Simultaneously, the exponential growth of data from IoT devices, mobile applications, and enterprise platforms is pushing organizations to deploy in-house AI servers for faster processing, enhanced security, and reduced dependency on shared cloud resources.

In 2023, NVIDIA Corporation shipped approximately 3.76 million data-center GPUs, giving it a dominant share of the data-center GPU market. The architectural suitability of GPUs for AI workloads and NVIDIA’s ecosystem leadership have firmly established GPU-based servers as the backbone of the U.S. AI server industry.

Generative AI and Large Language Models (LLMs)

The rise of generative AI and LLMs represents one of the most powerful growth engines for the U.S. AI server market. Training and fine-tuning large models require enormous parallel compute capacity, high-bandwidth memory, and ultra-fast interconnects—capabilities best delivered by specialized AI servers.

Enterprises are increasingly customizing foundation models using proprietary datasets, shifting workloads from public clouds to dedicated or hybrid environments. This trend directly drives sales of on-premises and private AI server clusters. Inference workloads are also scaling rapidly as LLMs are embedded into productivity tools, customer service platforms, software development pipelines, and content creation workflows.

In September 2022, NVIDIA launched the NVIDIA NeMo LLM Service and NVIDIA BioNeMo LLM Service, enabling developers to adapt large language models for applications such as text generation, chatbots, code development, and biomolecular research. These innovations further accelerated enterprise-level adoption of AI servers across the United States.

Government, Regulatory, and Security Considerations

Government policy, regulatory requirements, and security concerns are indirectly accelerating AI server deployment in the United States. Sensitive workloads involving national security, healthcare data, financial records, and intellectual property often cannot rely exclusively on foreign or multi-tenant public cloud environments. As a result, organizations increasingly invest in on-premises or sovereign AI server infrastructure.

Heightened regulations around data residency, AI transparency, and model governance encourage the use of dedicated AI servers that offer greater control, auditability, and compliance. Additionally, cybersecurity mandates are driving investments in AI-powered threat detection and security analytics, which rely on high-performance compute platforms.

In December 2025, the U.S. government and allied nations published guidance outlining principles for safely integrating AI into critical infrastructure, reinforcing the need for secure, resilient, and well-governed AI computing environments.

Challenges in the United States AI Server Market

High Capital and Operating Costs

Despite strong momentum, high capital and operational costs remain a major challenge in the U.S. AI server market. Advanced GPU-based and custom-accelerator AI systems involve substantial upfront investments, often extending beyond servers to include networking, storage, power distribution, and cooling infrastructure.

AI servers consume significantly more power and generate more heat than traditional servers, resulting in higher electricity costs and more complex cooling requirements. Data centers may require major facility upgrades, including advanced cooling systems, reinforced power delivery, and redundancy infrastructure, which lengthen deployment timelines and raise total cost of ownership.
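The electricity portion of total cost of ownership is straightforward arithmetic once a rack's power draw is known. The sketch below assumes facility energy = IT energy × PUE (power usage effectiveness, the ratio of total facility power to IT equipment power), continuous operation over 8,760 hours a year, and a flat electricity price. The 8 kW and 40 kW rack loads, the PUE of 1.5, and the $0.08/kWh price are hypothetical placeholders, not figures from this report.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def annual_energy_cost(it_load_kw: float, pue: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Yearly electricity cost in dollars for one rack, including facility overhead.

    PUE = total facility power / IT equipment power, so facility energy = IT energy * PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_energy_kwh = it_load_kw * utilization * HOURS_PER_YEAR
    return it_energy_kwh * pue * price_per_kwh


# Hypothetical inputs for illustration only, not figures from the report.
traditional_rack = annual_energy_cost(it_load_kw=8, pue=1.5, price_per_kwh=0.08)
ai_rack = annual_energy_cost(it_load_kw=40, pue=1.5, price_per_kwh=0.08)
print(f"traditional: ${traditional_rack:,.0f}/yr, AI: ${ai_rack:,.0f}/yr")
# traditional: $8,410/yr, AI: $42,048/yr
```

Under these assumptions, running cost scales linearly with rack power, so a rack drawing five times the power costs five times as much to operate. Lowering PUE, for example through more efficient cooling, is the main lever for shrinking the overhead share.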

Skills Gaps and Integration Complexity

The deployment and operation of AI servers require specialized skills in machine learning frameworks, distributed computing, container orchestration, and high-performance networking. Skilled professionals in these areas are scarce and costly, creating barriers for many organizations.

Integrating AI servers into legacy IT environments also presents challenges. Existing data pipelines, storage systems, and security architectures are often not designed for AI-intensive workloads, leading to underutilized infrastructure or extended implementation timelines that reduce return on investment.

United States GPU-Based AI Server Market

GPU-based AI servers represent the dominant segment of the U.S. AI server market. GPUs are the preferred accelerators for deep learning and generative AI due to their massively parallel architecture and mature software ecosystem. Hyperscalers, SaaS providers, and enterprises widely standardize on GPU-accelerated platforms to support frameworks such as PyTorch and TensorFlow.

Continuous innovation in GPU architectures, memory bandwidth, and interconnect technologies is expected to keep GPU-based servers the primary foundation of AI infrastructure in the United States.

United States ASIC-Based AI Server Market

ASIC-based AI servers are emerging as a targeted, high-efficiency alternative to general-purpose GPUs. Designed to accelerate specific AI workloads such as inference, recommendation engines, or video analytics, ASICs can offer superior performance-per-watt and lower total cost of ownership for predictable, large-scale deployments.
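Performance-per-watt is a ratio of throughput to power, and its reciprocal gives energy per inference. The sketch below uses invented throughput and power numbers for a hypothetical general-purpose GPU and a hypothetical inference ASIC, simply to show how a chip with lower raw throughput can still come out ahead on energy efficiency for a fixed workload.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    throughput: float   # inferences per second on the target workload (hypothetical)
    power_watts: float  # average power under that workload (hypothetical)

    def perf_per_watt(self) -> float:
        """Inferences per second delivered for each watt drawn."""
        return self.throughput / self.power_watts

    def joules_per_inference(self) -> float:
        # 1 W = 1 J/s, so (J/s) / (inferences/s) = joules per inference.
        return self.power_watts / self.throughput


# Invented numbers purely to show the arithmetic.
gpu = Accelerator("general-purpose GPU", throughput=10_000, power_watts=700)
asic = Accelerator("inference ASIC", throughput=8_000, power_watts=300)

for chip in (gpu, asic):
    print(f"{chip.name}: {chip.perf_per_watt():.1f} inf/s per W, "
          f"{chip.joules_per_inference() * 1000:.1f} mJ per inference")
```

In this made-up case the ASIC is slower in absolute terms but uses roughly half the energy per inference. Real comparisons depend on the model, batch size, numeric precision, and software stack, so a result measured on one workload does not necessarily carry over to another.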

Major cloud providers and internet companies are early adopters of ASIC-based servers, often developing custom chips tailored to their platforms. This trend signals increasing diversification of AI server architectures in the U.S. market.

Cooling Technology Trends in the United States AI Server Market

Air Cooling AI Servers

Air cooling remains the most widely deployed thermal management solution, particularly in existing U.S. data centers. Familiar infrastructure, standard rack designs, and operational simplicity make air-cooled AI servers easier to integrate at moderate rack densities.

Hybrid Cooling AI Servers

Hybrid cooling solutions that combine air and liquid cooling are gaining rapid adoption as AI workloads increase rack power densities. By cooling the most heat-intensive components with liquid while using air cooling elsewhere, data centers can support 30–80 kW per rack and beyond without complete infrastructure overhauls.
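The arithmetic behind hybrid cooling can be sketched in a few lines: a rack's heat is split between a liquid loop (for example, cold plates on the accelerators) and room air, and the liquid share sets the required coolant flow through Q = m_dot · c_p · ΔT. The 60 kW rack, 75% liquid capture fraction, and 10 K coolant temperature rise below are hypothetical values chosen for illustration; water is approximated as 1 kg per litre with c_p ≈ 4186 J/(kg·K).

```python
from typing import Tuple

WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water near room temperature


def split_rack_heat(rack_kw: float, liquid_capture: float) -> Tuple[float, float]:
    """Split a rack's heat load (kW) between the liquid loop and room air.

    liquid_capture is the fraction of heat removed by liquid (hypothetical, 0..1).
    """
    if not 0.0 <= liquid_capture <= 1.0:
        raise ValueError("liquid_capture must be between 0 and 1")
    liquid_kw = rack_kw * liquid_capture
    return liquid_kw, rack_kw - liquid_kw


def coolant_flow_lpm(heat_kw: float, delta_t_k: float) -> float:
    """Water flow in litres/min needed to carry heat_kw with a delta_t_k temperature rise.

    Rearranges Q = m_dot * c_p * dT, taking water density as about 1 kg per litre.
    """
    kg_per_s = heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)
    return kg_per_s * 60.0


# Hypothetical 60 kW rack where liquid cooling captures 75% of the heat.
liquid_kw, air_kw = split_rack_heat(60.0, 0.75)
print(f"liquid loop: {liquid_kw:.0f} kW, room air: {air_kw:.0f} kW")
print(f"water flow at 10 K rise: {coolant_flow_lpm(liquid_kw, 10.0):.1f} L/min")
```

In this example only 15 kW of the 60 kW rack load would need to be removed by air, a far smaller air-side burden than cooling the whole rack with air, which is the practical appeal of hybrid designs in existing facilities.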

United States AI Server Form Factor Analysis

AI Blade Server Market

AI blade servers deliver dense, modular compute in chassis-based systems. Shared power, cooling, and networking reduce cabling complexity and improve manageability, making blade servers attractive for enterprise data centers and campus environments with space constraints.

AI Tower Server Market

AI tower servers cater to edge locations, branch offices, and small enterprises that require localized AI inference without full data center infrastructure. These systems support use cases such as video analytics, real-time inspection, and localized decision-making.

End-Use Industry Analysis

BFSI AI Server Market

In the U.S. BFSI sector, AI servers support fraud detection, algorithmic trading, credit scoring, risk modeling, and customer personalization. Strict regulatory oversight and low-latency requirements drive investments in secure, on-premises AI server infrastructure.

Healthcare & Pharmaceutical AI Server Market

Healthcare and pharmaceutical organizations deploy AI servers for medical imaging, genomics, drug discovery, and clinical decision support. Large, sensitive datasets and regulatory requirements make private and hybrid AI infrastructure essential.

Automotive AI Server Market

The automotive industry relies on AI servers throughout the vehicle lifecycle, from autonomous driving R&D to manufacturing optimization and connected services. Large GPU clusters are critical for training perception and control models for ADAS and autonomous vehicles.

State-Wise United States AI Server Market Analysis

California AI Server Market

California is the epicenter of the U.S. AI server market due to its concentration of hyperscalers, AI startups, and semiconductor companies. In October 2025, Super Micro Computer, Inc. launched a new subsidiary to provide AI server solutions to U.S. federal agencies, with manufacturing and testing based in Silicon Valley.

New York AI Server Market

New York’s AI server demand is driven by financial institutions deploying AI for trading, fraud detection, and compliance. Low-latency colocation facilities near financial exchanges make local AI clusters strategically important.

Texas AI Server Market

Texas is rapidly emerging as a major AI server hub due to favorable energy economics, abundant land, and large-scale data center development. Enterprises across energy, manufacturing, logistics, and healthcare drive strong regional demand.

Market Segmentation

By Type

- GPU-based Servers

- FPGA-based Servers

- ASIC-based Servers

By Cooling Technology

- Air Cooling

- Liquid Cooling

- Hybrid Cooling

By Form Factor

- Rack-mounted Servers

- Blade Servers

- Tower Servers

By End Use

- IT & Telecommunication

- BFSI

- Retail & E-commerce

- Healthcare & Pharmaceutical

- Automotive

- Others

By Top States

California, Texas, New York, Florida, Illinois, Pennsylvania, Ohio, Georgia, New Jersey, Washington, North Carolina, Massachusetts, Virginia, Michigan, Maryland, Colorado, Tennessee, Indiana, Arizona, Minnesota, Wisconsin, Missouri, Connecticut, South Carolina, Oregon, Louisiana, Alabama, Kentucky, Rest of United States

Competitive Landscape and Company Analysis

The U.S. AI server market is highly competitive and innovation-driven. Key players include:

- Dell Inc.

- Cisco Systems, Inc.

- IBM Corporation

- HP Development Company, L.P.

- Huawei Technologies Co., Ltd.

- NVIDIA Corporation

- Fujitsu Limited

- ADLINK Technology Inc.

- Lenovo Group Limited

- Super Micro Computer, Inc.

Each company is analyzed across five viewpoints: overview, key personnel, recent developments, SWOT analysis, and revenue analysis.
